The next standard we’ll explore in our series on understanding WCAG 2.0 is 2.4.1, Bypass Blocks. This standard is required for WCAG level A compliance, which is part of what Section 508 requires. For the first time in this series, we’re exploring a standard that’s substantially equivalent to existing 508 standards (1194.22(o)). Here’s the complete text:

2.4.1 Bypass Blocks: A mechanism is available to bypass blocks of content that are repeated on multiple Web pages. (Level A)

The existing 508 standard is a little more specific, simply requiring a mechanism to bypass “repetitive navigation links”.

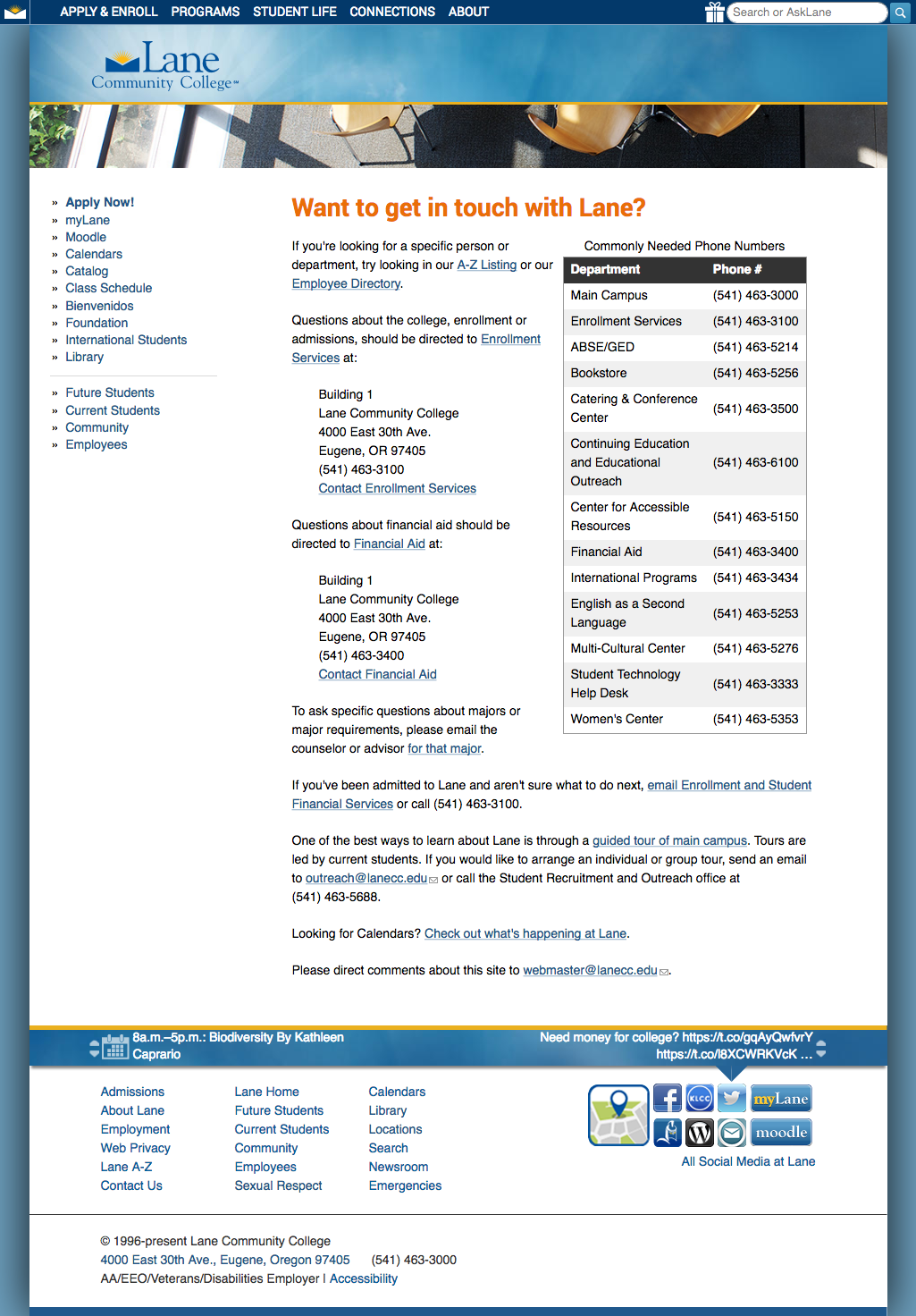

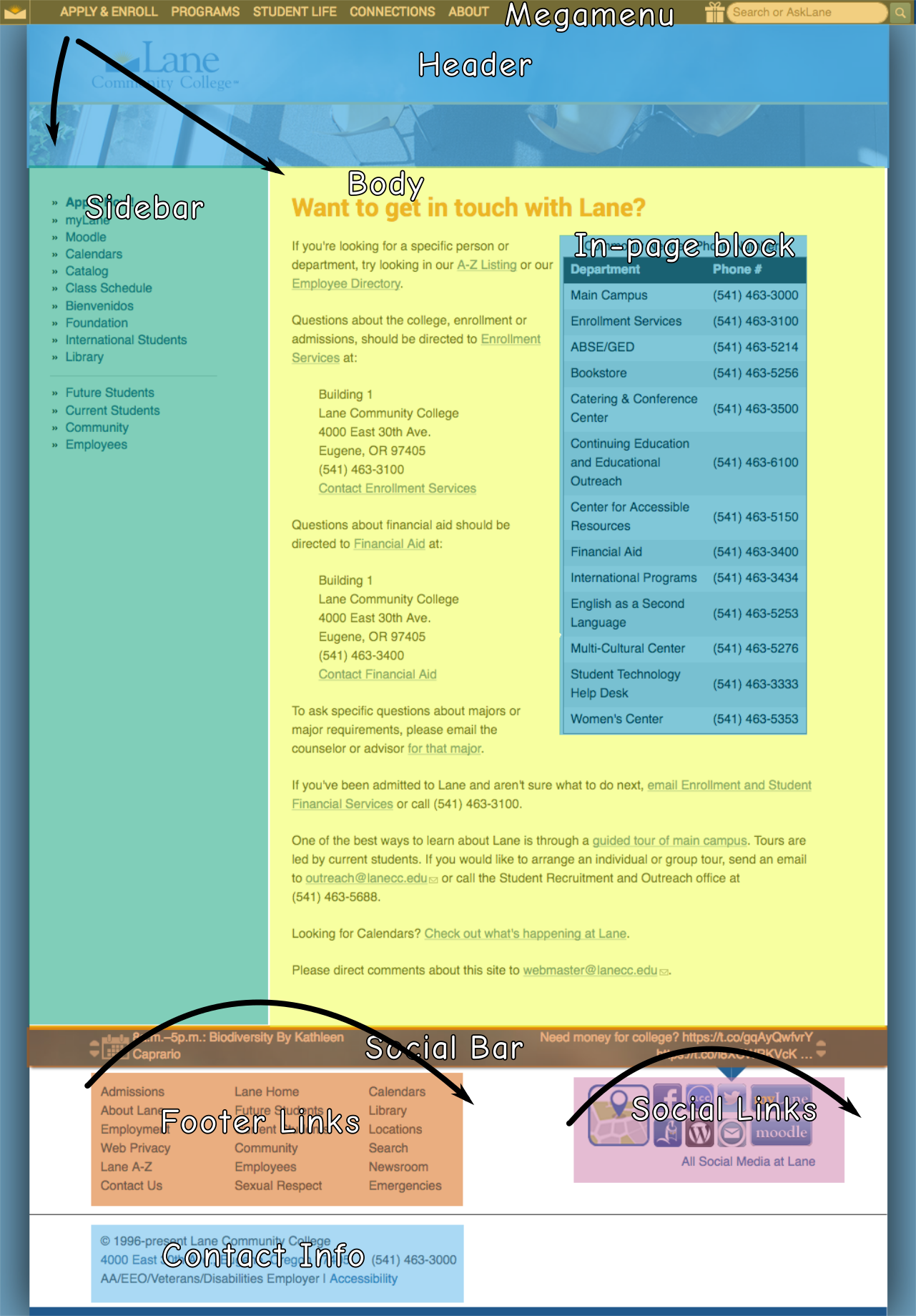

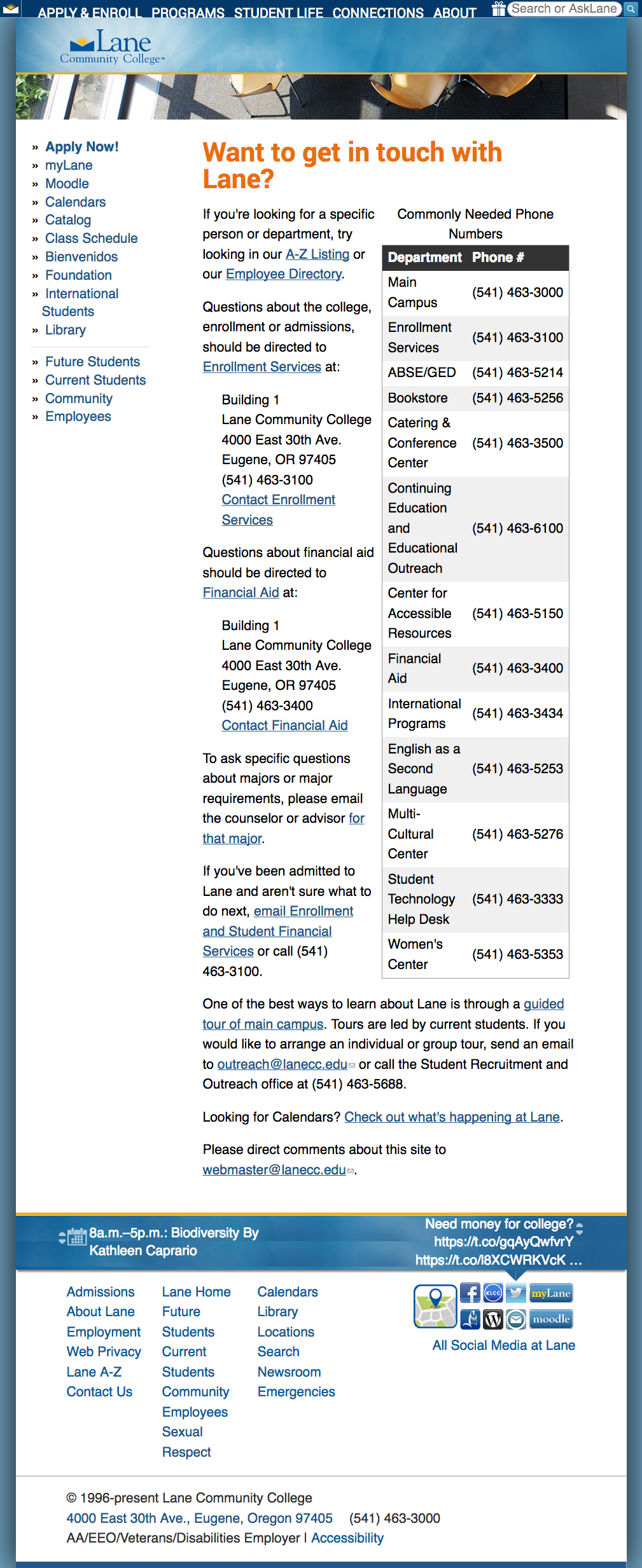

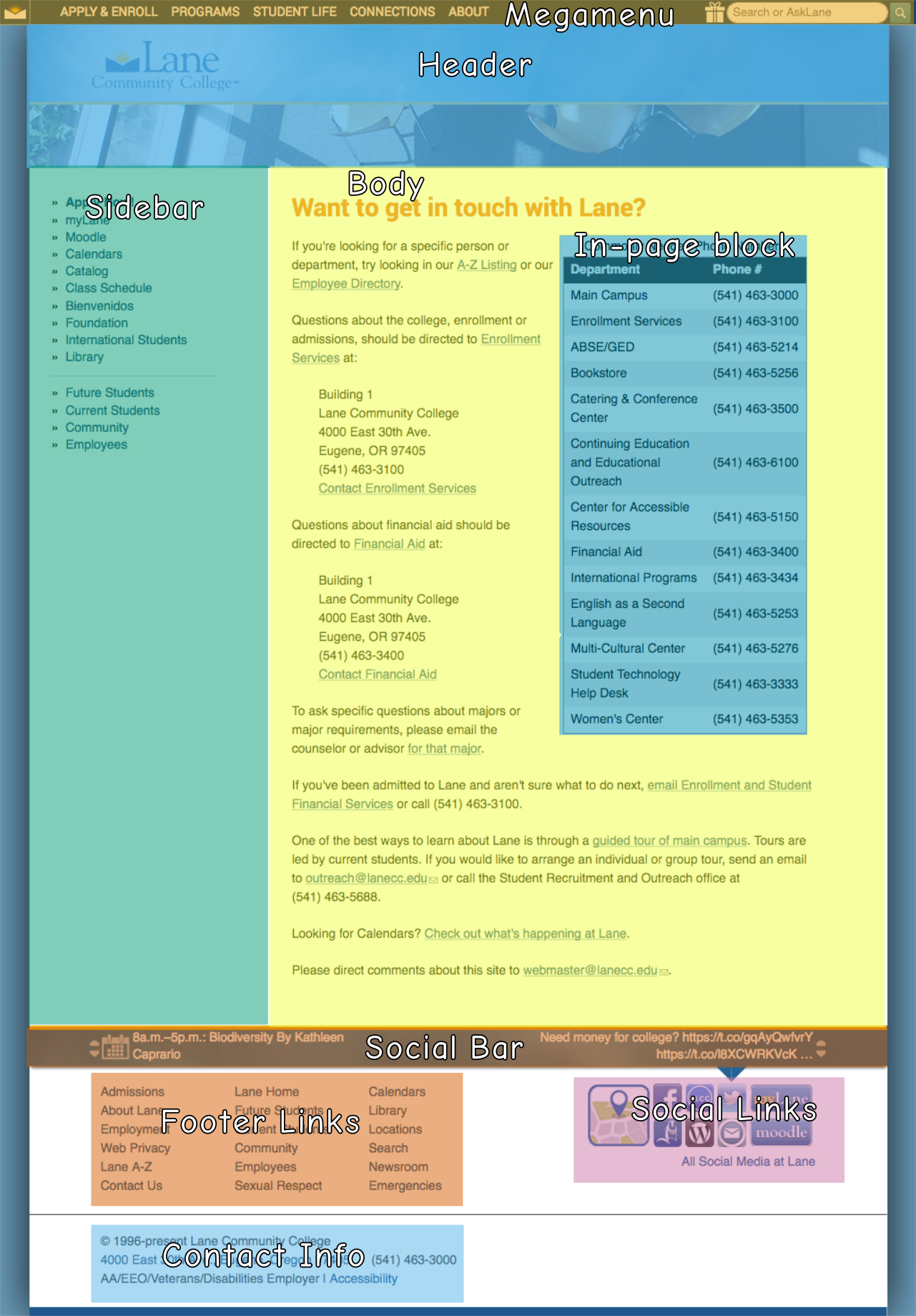

Let’s look at a screenshot of a page on the Lane website, and then break it into some blocks.*

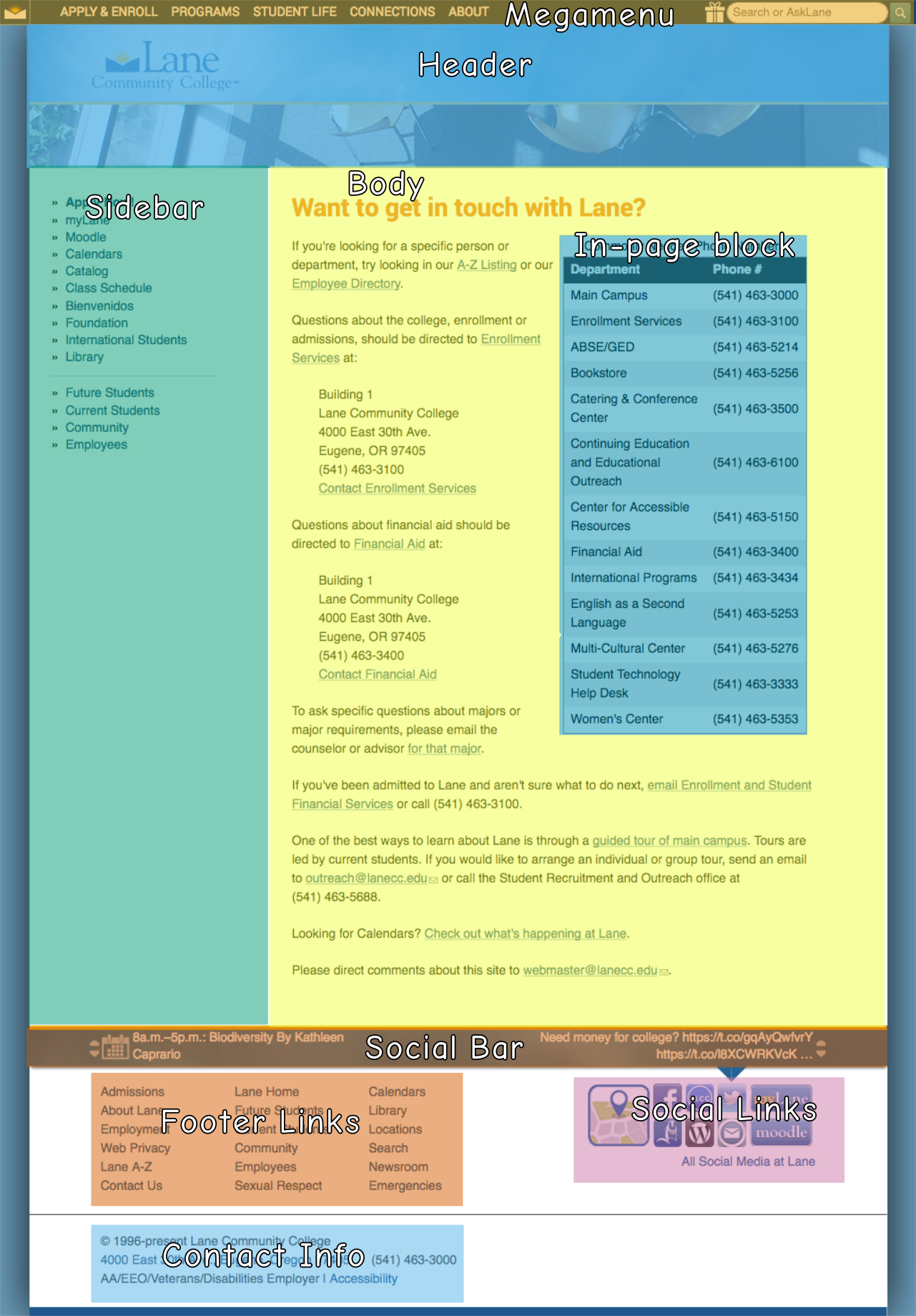

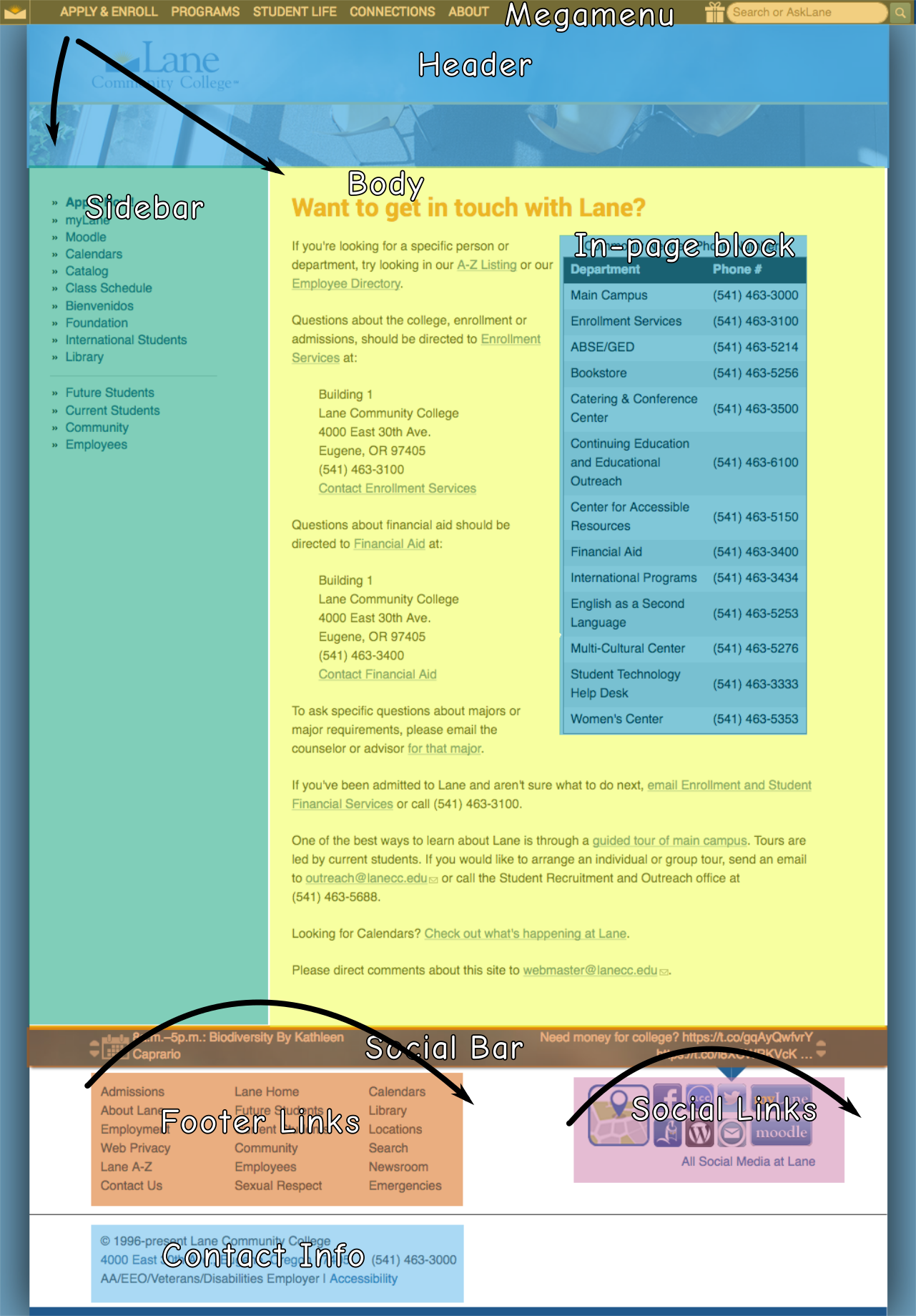

If we overlay this page with some blocks, we get something like this:

If you’re a sighted person, you can quickly skip over the repetitive blocks that appear on every page – you just move your eyes immediately to the start of the body. But if you’re dependent on a screen reader, you’re forced to read the entire page from top to bottom, with one notable exception, like this:

- Header

- Sidebar

- Body (including the in-page block)

- Social Bar

- Footer Links

- Social Links

- Contact Info

- Megamenu

Notice how the body is the 3rd thing on there? If you depend on a screen reader, you’ll be forced to hear those first three regions read to you on every single page. In our case, that could be well over a hundred links read to you before you get to the content you need.

And why’s the megamenu block read last, even though it’s at the top of the page? That’s an example of a block where the position in the code doesn’t match the position on the page. The megamenu appears to be at the top of the page, but it’s actually at the bottom of the code, meaning a screanreader reads it last. There’s some complex reasons for that, which would make this post unreasonably long, so we won’t go into them here.

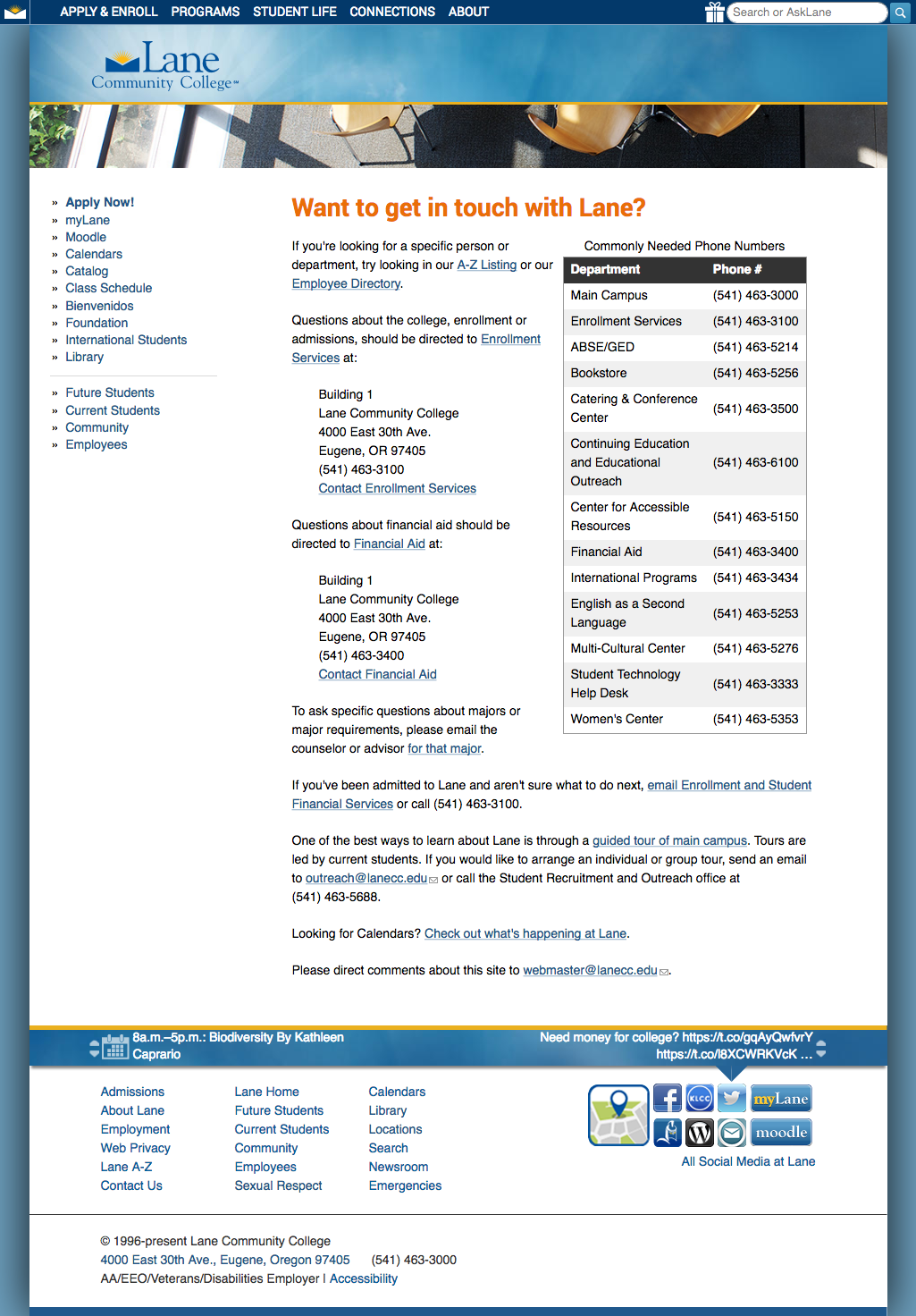

Section 508 helps out by requiring a way to skip repetitive navigation links. Usually this is done by creating a link on the text “skip to content” at the very top of the page, which links to an anchor mid page, right where the body is. To meet this 508 requirement, we put a pair of skip links at the top of all our pages:

- One that says “skip to navigation”, which skips directly to the sidebar

- One that says “skip to main content”, which skips directly to the top of the body

We also put two more in the footer, which let you skip over parts of the footer:

- One at the top of the footer links, which skips to the footer links

- One at the top of the social links, which skips to the contact info

There isn’t one to skip the megamenu, since it’s at the bottom of the page, so there’s nothing to skip to – this is a bit of an imperfect solution, but until we can fix it (See this issue to follow along), it’ll work.

Together, these skip links give people these shortcuts to skip parts of the page that appear all over the site:

Though it seems like the skip to navigation link is a bit silly (since it’s skipping nothing in that image), it’s actually important for other pages where we have links in the header (like our landing page).

Under WCAG, instead of just skipping repetitive navigation links, we also need to have a way to skip repetitive page blocks. And that means we need to consider content even at the paragraph level. So while the in-page block in our image isn’t a problem on the contact page, it would become a problem if it was on every page on the site, or even every page on a chunk of the site (like every page in the science department). Then we’d need a skip link.

I’ve seen repetitive text on our site in two places. First is departments that have a common paragraph or sentence they show on every page. For instance, a department might want to show their department vision on every page. That’s actually not ok – instead, they should have their vision just once on their landing page, or link to a page with their vision from their menu.

The second place is people who put lots of links that say “return to top”, which link to the top of the page. Strictly speaking, these aren’t really skip links, but they are completely unnecessary, and a holdover from an earlier time on the web. Screen readers and keyboards both have shortcuts to jump to the top of the page (it’s ctrl-home on a windows computer or cmd-up on a mac) – but most people just scroll or flick the page with their finger on a phone. There’s a place to use an in-page link to the top of the page, but those places are pretty rare.

If you’d like to read more about bypass blocks, you may also be interested in these techniques, which provides examples and more detail. Specifically, it includes discussion about about using aria-roles, which provide an easy way to identify page regions, and make it easier to navigate a page.

Interested in more? Check out the listing of all the posts in this series.

* The word blocks has a very specific meaning in Drupal, but that’s not how we’re using it here. As far as WCAG is concerned, blocks can refer to Drupal blocks, regions, zones, or even just paragraphs.