Back when we first started the website redesign, we received a lot of feedback about how our search engine – https://search.lanecc.edu – didn’t work very well. Now most of our questions are about altering the behavior of the search engine to make it work differently. Over the next few posts, I’d like to explore what changed, as well as why not every request can be responded to, as well as dig a little bit into what you, as a Drupal editor, can do to improve how the search engine views your pages.

How does search work at Lane?

We use a Google Mini search engine, which allows us to index and search up to 50,000 documents. We have complete control over what pages are in our search (the “index”), and limited control over what’s shown in the results. Also, it’s blue, which is a nice contrast to the beige and black of most of the machines in the data center.

The first big change we made to search as part of the redesign was to upgrade our Google Mini, which we did in early 2012, switching to a brand new search server with upgraded hardware and software. We found a pretty immediate improvement – no longer did it feel like using an old search engine from ’05 or ’06, and instead it felt like using one from ’10 or ’11. Unfortunately, Google has discontinued the Mini, and there will be no further upgrades. We’ll need to find a new solution in the future (Apache Lucerne?).

Along came a migration

Then we started migrating pages to Drupal. This brought with it a bunch of new practices that we’ll get into some other time, but all of which dramatically increased the relevance of search. The down side is that we changed virtually all of the URLs for pages on lanecc.edu (Yes Sir Tim, I know it was a bad idea). While we’ll hopefully never need to do this again, it meant there was some confusion in the results for a while.

The migration also meant that we cut a lot of pages. More than 10,000 of them. Enough pages that cutting them significantly changed how the mini calculated page rank. We’re still removing these from the search index. It’s a slow process, since we don’t want to delete more than one or two folders worth of files each day, so that if someone was still depending on a page or image that didn’t get migrated, it won’t be as hard to get that person their missing files.

Reset

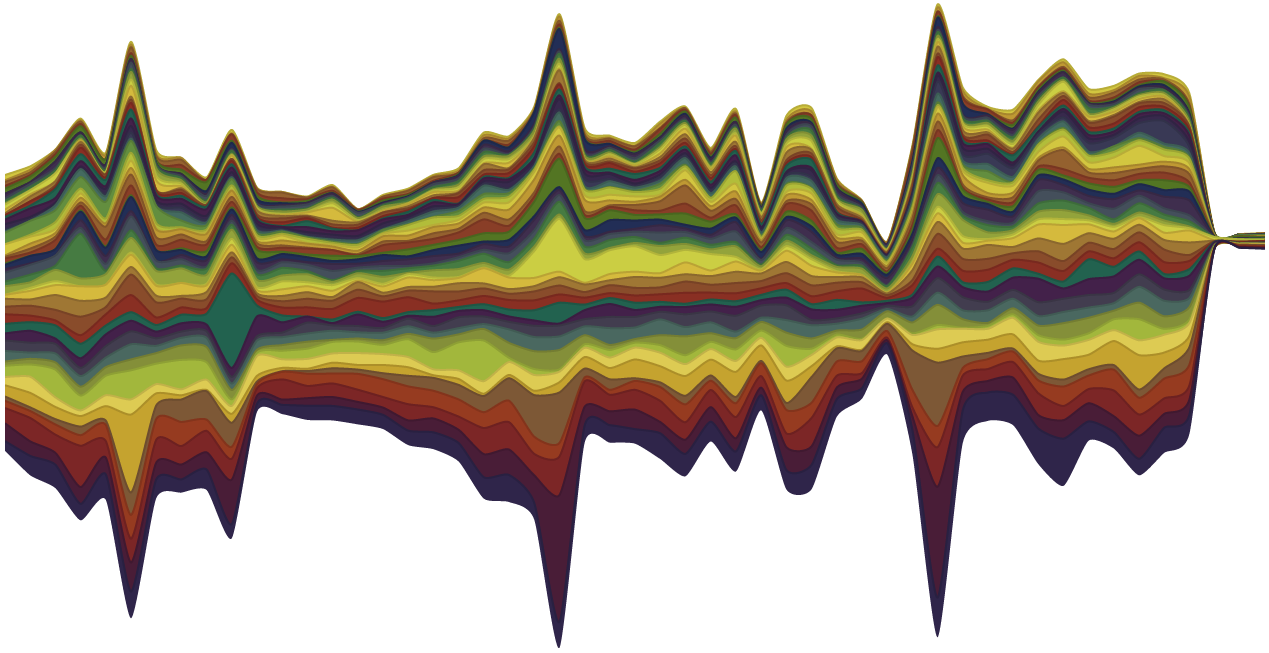

Since the mini wasn’t removing pages that had long since disappeared, we decided to reset our search index. This is pretty much what it sounds like. We tell the mini to forget about all the pages it knows, and start over from the beginning. When we did this last, around Thanksgiving, our document count in the index went from about 40,000 all the way down to 16,000. We think results improved quite a bit.

We’ll reset again around Christmas, which is traditionally one of the slowest days on the website. Hopefully that’ll bring the document count down even more, and make results even better.

Biasing

At the same time as our last reset, I figured out that I’d been using Results Biasing incorrectly. Results Biasing is a way that we an introduce rules into the search engine to influence the results. Our first rule tells the mini to significantly decrease the pagerank of urls that end with xls or xlsx, under the assumption that when you’re searching Lane, you’re probably not interested in some Excel sheet.

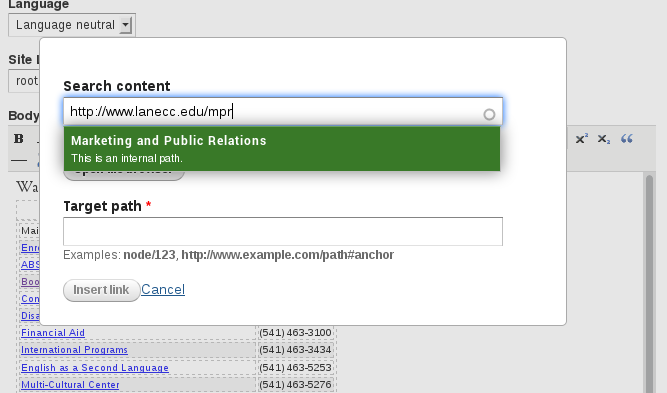

I thought that by simply entering rules in the Results Biasing page on the mini administrative interface, I was affecting the search results. Turns out this isn’t actually true. There’s a second radio button to hit on the Frontend Filter, where you actually enable Result Biasing on the collection that frontend is searching. What are containers and frontends? A container is a collections of urls that match certain patterns. For example, we have a container of just pages that match pages related to our COPPS pages, and another just for the Library. Frontends are the user interface to those collections, where you can customize what the search button says, what the background color of the results is, etc. You can use any front end with any collection, but in our case each frontend belongs to just one collection.

Feedback

The other big thing we did to our search was to add a feedback form in the bottom right hand corner of the search results page. To date we’ve had 40 people let us know about their searches that didn’t get them the results they needed. Many of those have resulted in us making a tweak to the search engine, either adding pages to the index, or adding a KeyMatch, or fixing something on the page to improve its visibility.

Feedback has started to taper some, while queries have stayed steady (if you adjust for changes in enrollment), so our assumption is that search is working pretty well. If it isn’t, please submit some feedback!

Next time

Now that we’ve got some basics out of the way, next time we can dig into how search engines calculate results (If you’d like some homework, here’s a bit of light reading), as well as more of what we can do to influence those results.