I know, I know, we’re in week 4, and I’m finally putting out my first post of the year. But it’s 2020. It’s that kind of year.

Last week the web team attended the 2020 HighEdWeb annual conference. This is one of my favorite conferences, and it was even better this year because it was completely free and online (though in Central Time, which made for some early mornings). Here are some gems:

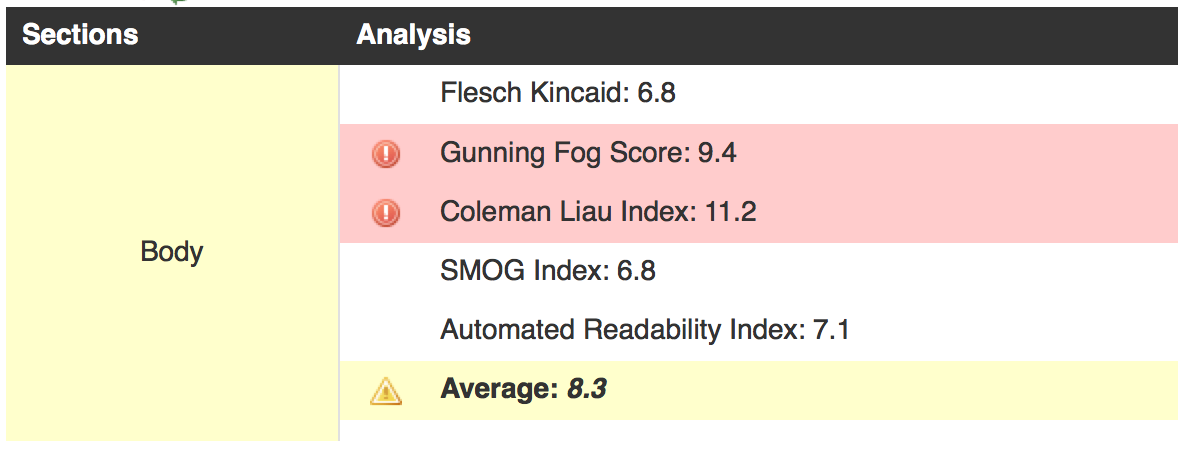

Plain language matters.

It’s an accessibility issue. It’s an equity issue. People skim online. If we make that hard for them, they leave. Take a look at our previous plain language post.

Resource pages & emails don’t work.

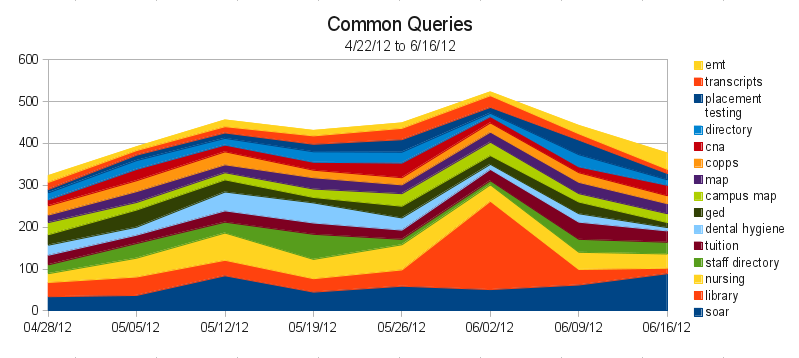

One of the groups at Miami University did extensive testing and found that people don’t self-serve. If you have a lot of links and resources that you want to put out there, consider a drip campaign that provides those links slowly, at a pace people can digest. Use social media to highlight different resources at different times. Include titles that highlight the problem the resource solves (“Looking for a tutor late on a Sunday night before class? Look no further!”). Track what you do to see what works (and reach out if you’d like help setting that up!). Rather than a resource page, consider a blog, where those resource links can be provided in context and do some content marketing for you. We’ve also covered resource pages in the past.

Stop posting flyers and event posters online.

Especially now, when we’re not going to see them in person. Flyers and posters are designed for print, and they don’t translate well to digital. If you’re considering putting a flyer or poster online or on a digital sign, reach out – we’ll connect you with some graphic design resources and help you design for the medium you’ll be promoting on.

The college website is for prospective students. And those students know when they’re being marketed to.

Carlton did a great session where they detailed extensive user testing before their homepage redesign. They found things like:

- Prospective students (particularly Gen Z) think the entire website is for them, even the section clearly labeled “Alumni”. Your homepage in particular should be for them before any other audience: most other audiences search for something, then land on some other page. Prospective students are the most likely, by far, to land on the homepage.

- They know when pictures are staged. They want to see people in place: shots that show what students actually do somewhere on your campus, and how that sets you apart. Person under a tree reading a book? Clearly staged. Dining hall shot? Every college has a dining hall. Candid shot of a class outside? Student learning to machine something? Much better.

- Carousels don’t work. I think one possible exception is a photo gallery, but that’s tricky.

- The large hero image on a program’s website sets the tone and creates a greater impression than all the text there.

- From one of their slides: “Students want #nofilter, but we’re giving them #fellowkids”

FAQs don’t work.

While we may think splitting our content up into questions makes it easier for students, it actually makes things harder to understand. Read your entire FAQ page, group the related content and write a header for each group, and then rewrite the content in each group in paragraph form. It’ll work better for everyone. Here’s a page with the slides, a sample FAQ with real-life before-and-after examples, and some other resources on why we should stop using FAQ pages. You can also review our previous post on FAQs.